How to Resolve the “Blocked by robots.txt” Issue in GSC

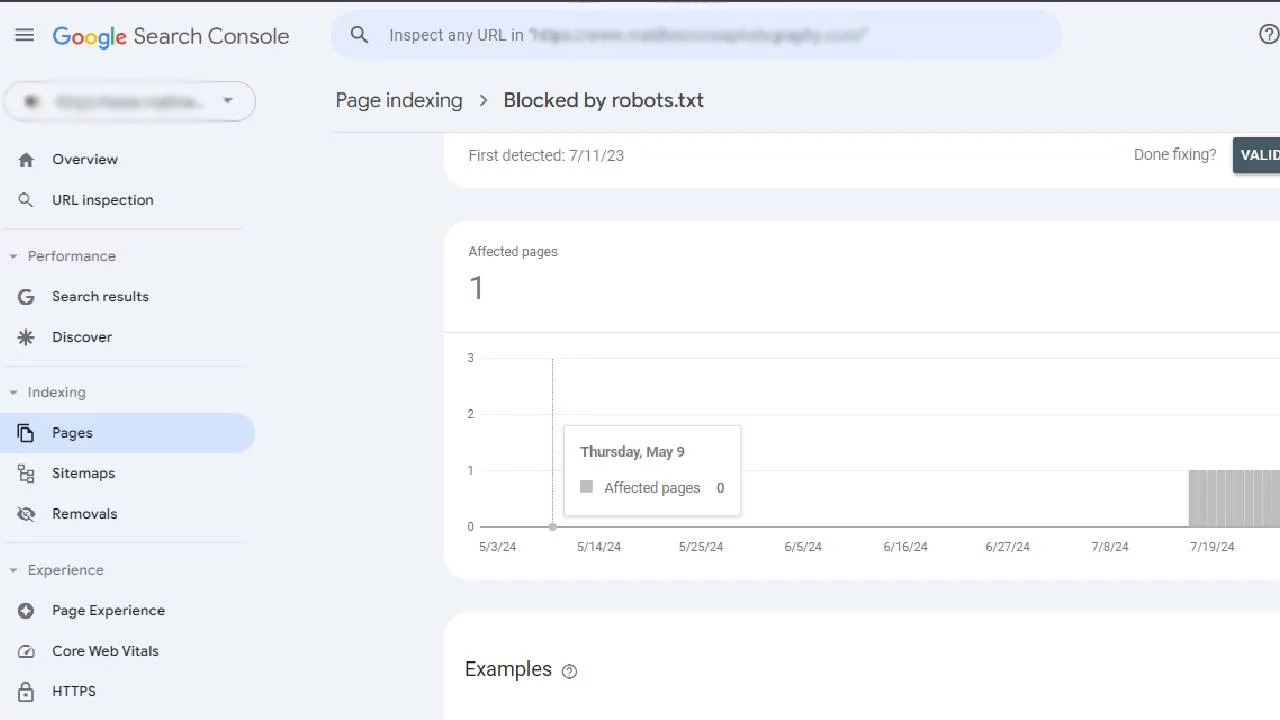

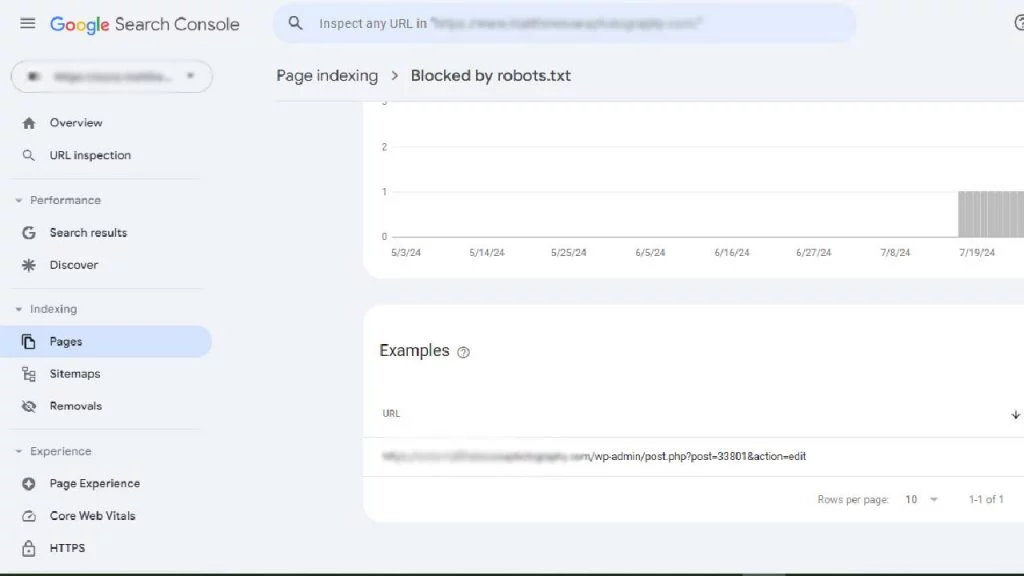

A “Blocked by robots.txt” warning in Google Search Console means that your site’s robots.txt file is preventing Googlebot from crawling certain URLs, which can hurt your site’s search visibility.

Understanding Robots.txt

The robots.txt file tells search engine crawlers which parts of your website they may crawl. It controls crawling rather than indexing: a blocked URL can still appear in search results if other pages link to it, but Google cannot read its content.

- Location: The robots.txt file sits at the root of your website, for example https://www.example.com/robots.txt.

- Purpose: It tells search engine crawlers, such as Googlebot, which pages they are allowed to access and which they are not.

- Commands: It uses directives like “User-agent,” “Disallow,” and “Allow” to control crawler behavior.
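As a rough illustration of how these directives fit together, here is a minimal sketch. The paths are hypothetical and only stand in for whatever directories your own site uses:

```text
# Rules that apply only to Google's main crawler
User-agent: Googlebot
Disallow: /private/
Allow: /private/press-kit/

# Rules for every other crawler
User-agent: *
Disallow: /private/
```

Each group starts with a User-agent line, and the Disallow and Allow lines under it apply to that crawler only.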

When to Block or Unblock URLs

Blocking certain URLs can be beneficial, particularly for:

- Dynamic URLs: URLs with parameters related to search filters, product variations, or other non-static content.

- User-Specific URLs: Pages tied to user accounts, shopping carts, or checkout processes, which don’t need to be indexed.

However, unintentionally blocking important URLs can reduce your site’s visibility in search results.
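The two categories listed above could be blocked with rules along these lines. This is only a sketch: the parameter names and paths are assumptions, so substitute the ones your site actually uses.

```text
User-agent: *
# Dynamic URLs: hypothetical filter and sort parameters
Disallow: /*?*filter=
Disallow: /*?*sort=

# User-specific pages: hypothetical paths
Disallow: /account/
Disallow: /cart/
Disallow: /checkout/
```

Google treats * as a wildcard that matches any sequence of characters, so the first two rules catch the parameter wherever it appears in the query string. Broad patterns like these are also the easiest way to block important URLs by accident, so check them against real URLs from your site.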

Steps to Fix the “Blocked by Robots.txt” Issue

If your website is experiencing the “Blocked by robots.txt” issue in Google Search Console, following these steps can help you resolve it and restore your site’s search visibility.

1. Identify Blocked URLs

- In Google Search Console, open the “Pages” report under Indexing (formerly called “Index Coverage”) to find URLs that are blocked by robots.txt.

- Assess whether these URLs should indeed be accessible to Googlebot.

2. Edit Your Robots.txt File

- Access the robots.txt file through your website’s CMS or an FTP client.

- Ensure you make a backup of the original file before making any changes.

3. Review and Modify the File

- Look for any directives that are blocking Googlebot from the URLs in question.

- Adjust these directives as needed. For example, you might change Disallow: /products/ to Disallow: /products/sale/ to allow Googlebot to crawl other product pages.

- Be mindful of correct syntax and avoid excessive use of wildcard characters.
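The change described above might look like this in the file. The User-agent line is an assumption, since the rule could equally sit in a group for all crawlers:

```text
User-agent: Googlebot
# Before: blocked every URL under /products/
# Disallow: /products/
# After: blocks only the sale subdirectory
Disallow: /products/sale/
```

Lines starting with # are comments and are ignored by crawlers, which makes them a convenient way to record what a rule used to be.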

4. Test Your Changes

- Use a robots.txt testing tool, such as the robots.txt report or the URL Inspection tool in Google Search Console, to ensure your modifications work as intended.

- Verify that Googlebot can now access the previously blocked URLs.
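For a quick local check before uploading, one option is Python's standard-library `urllib.robotparser`. The sketch below is only a rough approximation: the rules and URLs are hypothetical, the `is_allowed` helper is a name made up for this example, and the standard-library parser does not implement Google's wildcard or most-specific-rule matching, so treat Google's own tools as the final word.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules; in practice, paste in the contents of your edited file.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /products/sale/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given rules let user_agent fetch url."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # Hypothetical URLs: one that should be crawlable, one that should not.
    urls = (
        "https://www.example.com/products/widget",
        "https://www.example.com/products/sale/widget",
    )
    for url in urls:
        status = "allowed" if is_allowed(ROBOTS_TXT, "Googlebot", url) else "blocked"
        print(f"{status}: {url}")
```

Running the script prints one line per URL, so you can list the URLs that Search Console flagged and confirm each one is no longer blocked by a simple prefix rule.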

5. Update and Submit the File

- Save your revised robots.txt file to your website’s root directory.

- Allow time for Googlebot to re-crawl the site and reflect these changes in the index.

Additional Recommendations

To maintain optimal search visibility and crawler efficiency, consider these additional recommendations when managing your robots.txt file.

- Be Specific: Use precise directives to manage access to particular pages or directories.

- Avoid Overblocking: Blocking too many pages can harm your search visibility.

- Use “Allow” Directives: Where necessary, explicitly permit access to certain pages.

- Submit a Sitemap: Use Google Search Console to submit a sitemap, prioritizing important pages.

- Monitor Regularly: Keep your robots.txt file and Google Search Console under regular review to ensure everything is functioning correctly.
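To illustrate the “Be Specific” and “Allow” recommendations together, here is a small sketch with hypothetical paths:

```text
User-agent: *
# Block the downloads directory as a whole...
Disallow: /downloads/
# ...but explicitly permit one file inside it
Allow: /downloads/catalog.pdf
```

Google applies the most specific matching rule, meaning the one with the longest path, so the Allow line wins for that single file while the rest of the directory stays blocked.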

Sample Robots.txt File

Here’s an example of a robots.txt file that allows Googlebot to access all pages except those within the “/admin/” and “/checkout/” directories, while explicitly allowing access to images:
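A minimal sketch matching that description, assuming images live under a hypothetical /images/ directory:

```text
User-agent: Googlebot
# Keep Googlebot out of the admin and checkout areas
Disallow: /admin/
Disallow: /checkout/
# Explicitly allow image files
Allow: /images/
```

Anything not matched by a Disallow line remains crawlable by default, so the Allow line here is not strictly required. It mainly documents intent and guards against a broader Disallow rule being added later.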

Finally

Before altering your robots.txt file, carefully evaluate your website’s structure and objectives. Incorrect modifications can have unintended consequences, such as blocking pages you want crawled. By following these steps, you can resolve the “Blocked by robots.txt” issue and improve your site’s visibility in search results.

Author

We are a digital marketing agency with over 17 years of experience and a proven track record of helping businesses succeed. Our expertise spans businesses of all sizes, enabling them to grow their online presence and connect with new customers effectively. In addition to offering services like consulting, SEO, social media marketing, web design, and web development, we pride ourselves on conducting thorough research on top companies and various industries. We compile this research into actionable insights and share it with our readers, providing valuable information in one convenient place rather than requiring them to visit multiple websites. As a team of passionate and experienced digital marketers, we are committed to helping businesses thrive and empowering our readers with knowledge and strategies for success.
