How to Fix Blocked Due to Unauthorized Request (401) in GSC

Finding a “Blocked due to unauthorized request (401)” error in Google Search Console can be alarming. It means Googlebot, Google’s crawler, was refused access to certain pages on your website because the server required authorization. That can keep those pages from being indexed and ranked correctly, which affects your search traffic. This article explains what the error means and how to fix it in several common scenarios.

What Does the 401 Error Mean?

A “Blocked due to unauthorized request (401)” error means that when Googlebot requested a page, your server answered with HTTP status 401 (Unauthorized): the page requires authentication that Googlebot cannot provide. This can happen for several reasons, including:

- Password Protection: The pages require a password to access.

- IP Restrictions: Your server or firewall blocks requests from certain IP addresses, including Googlebot’s.

- Authentication Problems: Issues with the site’s login mechanism prevent Googlebot from reaching pages.

Scenario # 1: Correcting Unintentional Restrictions

Sometimes the 401 error comes from restrictions that were never meant to apply to Googlebot. Here’s how to correct them:

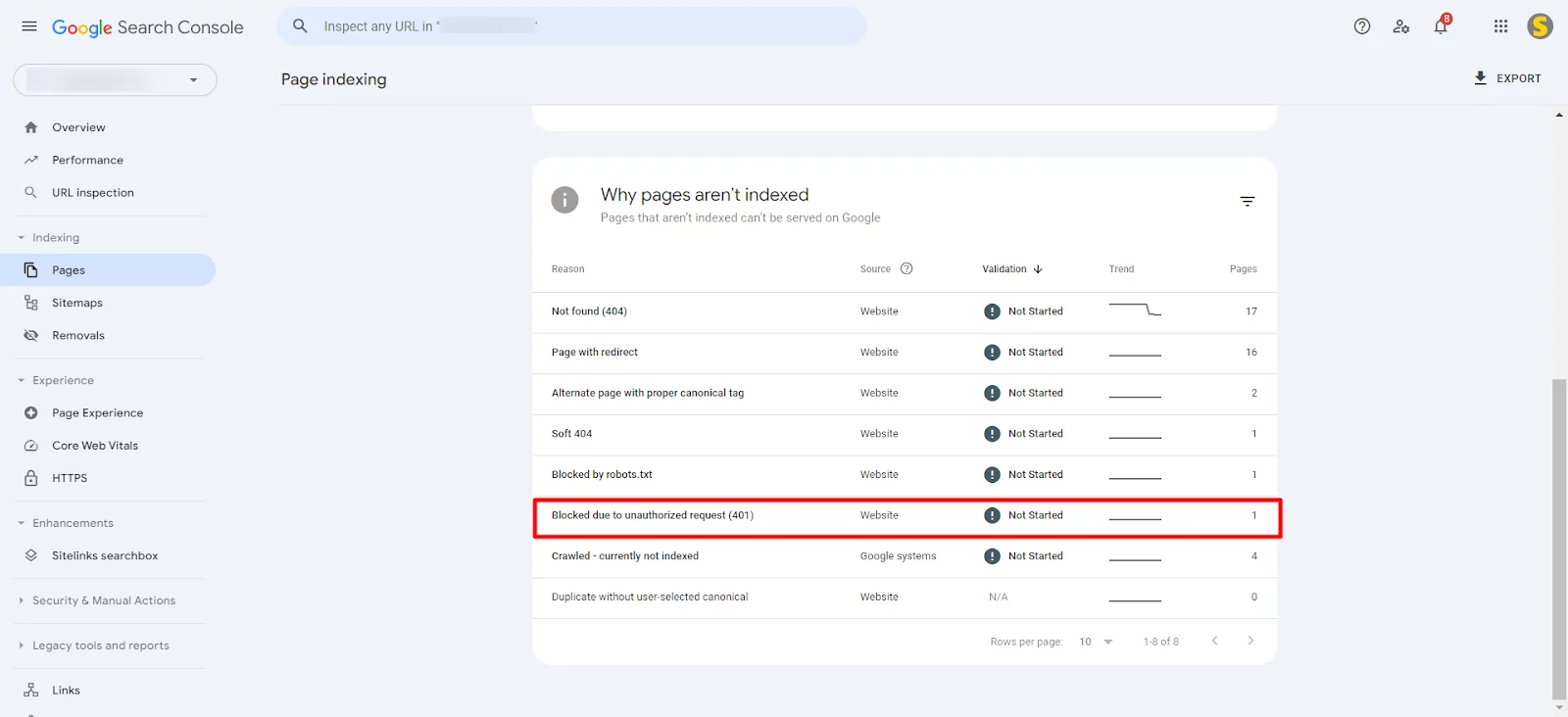

Step 1: Note the Blocked URLs

Start in Google Search Console and find the pages reporting the 401 error. The Page indexing report (formerly called the Coverage report) lists the affected URLs under “Blocked due to unauthorized request (401)”.
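If the report lists many URLs, grouping them by directory can reveal a shared cause, such as a whole members-only section behind a login. This Python sketch assumes you have exported the affected URLs from Search Console as a CSV file with a column named “URL”; the header in your actual export may differ, so adjust `url_column` to match. `group_by_directory` is a hypothetical helper, not part of any Google tool.

```python
import csv
import io
from collections import Counter
from urllib.parse import urlsplit


def group_by_directory(csv_text: str, url_column: str = "URL") -> Counter:
    """Count affected URLs per first path segment, e.g. '/members/'.

    Assumes the export has a column named `url_column` holding full URLs.
    """
    counts: Counter = Counter()
    for row in csv.DictReader(io.StringIO(csv_text)):
        path = urlsplit(row[url_column]).path
        # First non-empty path segment, or "/" for the site root
        segments = [s for s in path.split("/") if s]
        key = f"/{segments[0]}/" if segments else "/"
        counts[key] += 1
    return counts
```

Calling `.most_common()` on the result lists the directories with the most 401s first, which is usually where to look for a shared password or login rule.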

Step 2: Examine Password Protection

Check whether these pages are password-protected. If they contain valuable content that should be indexed, consider removing the password protection.

Step 3: Review IP Limits

Make sure Googlebot’s IP addresses are not blocked. Google publishes the IP ranges Googlebot uses in its official documentation; compare them with your server, firewall, and CDN settings and make sure those addresses are allowed.
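To check whether an IP address from your server or firewall logs falls inside Googlebot’s published ranges, you could compare it against the JSON file Google provides. This Python sketch assumes that file is laid out as a `prefixes` list whose entries carry an `ipv4Prefix` or `ipv6Prefix` key; confirm the current format before relying on it. The sample data uses reserved documentation ranges as placeholders, not real Googlebot addresses.

```python
import ipaddress
import json

# Placeholder data in the assumed ranges-file layout. These are reserved
# documentation ranges, NOT real Googlebot addresses.
SAMPLE_RANGES = """
{"prefixes": [{"ipv4Prefix": "192.0.2.0/24"}, {"ipv6Prefix": "2001:db8::/32"}]}
"""


def load_prefixes(ranges_json: str):
    """Parse the ranges document into a list of ip_network objects."""
    data = json.loads(ranges_json)
    networks = []
    for entry in data.get("prefixes", []):
        # Assumed: each entry has either an IPv4 or an IPv6 prefix key
        prefix = entry.get("ipv4Prefix") or entry.get("ipv6Prefix")
        if prefix:
            networks.append(ipaddress.ip_network(prefix))
    return networks


def is_in_ranges(ip: str, networks) -> bool:
    """True if the address lies inside any network (IPv4 never matches IPv6)."""
    address = ipaddress.ip_address(ip)
    return any(address in network for network in networks)
```

In practice you would download the current ranges file and pass its text to `load_prefixes` instead of `SAMPLE_RANGES`, then run the addresses your firewall has been rejecting through `is_in_ranges`.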

Step 4: Address Authentication Problems

Review your site’s authentication mechanism and make sure the pages you want indexed are not behind a login that Googlebot cannot get past. You may need to change your server configuration or the settings in your content management system (CMS).
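One way to keep indexable pages out of the login flow, rather than making an exception for Googlebot’s user agent (which anyone can spoof), is to require credentials only on the sections that truly need them. This is a minimal sketch as a plain Python WSGI app, assuming HTTP Basic authentication; `PROTECTED_PREFIXES`, `credentials_valid`, and the demo credentials are hypothetical placeholders for your real configuration and user store.

```python
import base64

# Hypothetical: only these sections require a login; everything else is public
PROTECTED_PREFIXES = ("/admin/", "/account/")


def is_protected(path: str) -> bool:
    """True for a protected section root (with or without slash) or anything under it."""
    return any(path == p.rstrip("/") or path.startswith(p) for p in PROTECTED_PREFIXES)


def credentials_valid(auth_header: str) -> bool:
    """Placeholder HTTP Basic check; replace with your real user lookup."""
    if not auth_header.startswith("Basic "):
        return False
    try:
        decoded = base64.b64decode(auth_header[len("Basic "):]).decode("utf-8")
    except ValueError:
        # Covers malformed base64 (binascii.Error) and bad UTF-8 (UnicodeDecodeError)
        return False
    user, _, password = decoded.partition(":")
    # Hypothetical demo credentials; never hard-code real secrets
    return user == "editor" and password == "change-me"


def app(environ, start_response):
    path = environ.get("PATH_INFO", "/")
    if not is_protected(path):
        # Public pages need no credentials, so Googlebot receives a 200
        start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
        return [b"Public content"]
    if credentials_valid(environ.get("HTTP_AUTHORIZATION", "")):
        start_response("200 OK", [("Content-Type", "text/plain; charset=utf-8")])
        return [b"Members-only content"]
    # Missing or wrong credentials: a 401 with a Basic challenge
    start_response(
        "401 Unauthorized",
        [
            ("Content-Type", "text/plain; charset=utf-8"),
            ("WWW-Authenticate", 'Basic realm="members"'),
        ],
    )
    return [b"Login required"]
```

To try it locally, `from wsgiref.simple_server import make_server` and run `make_server("127.0.0.1", 8000, app).serve_forever()`. The design choice is to shrink the protected area rather than special-case crawlers, so a 401 only ever appears on pages you actually meant to lock.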

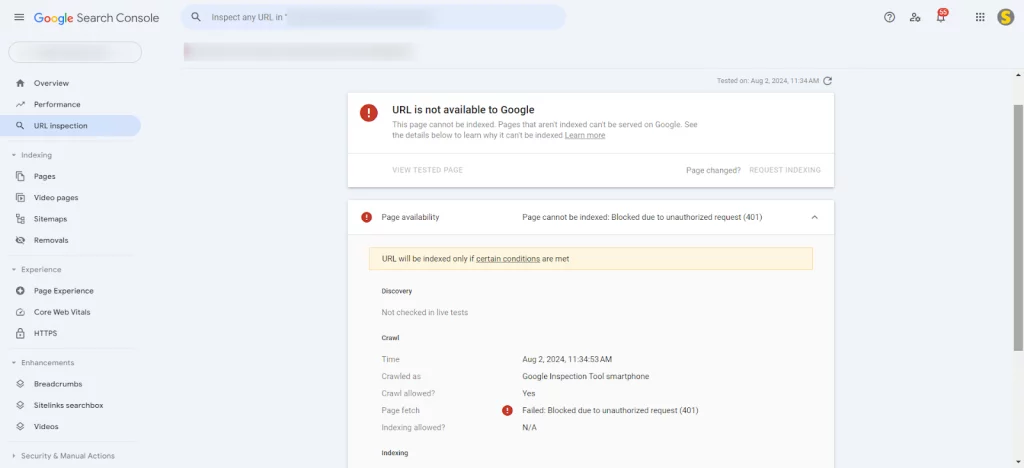

Step 5: Verify Your Fixes

Use the URL Inspection tool in Google Search Console to run a live test on the affected URLs. This confirms whether Googlebot can now reach the pages without receiving a 401 error.
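Alongside the live test, a quick script can re-request each affected URL and report which ones still return 401. This Python sketch sends Googlebot’s user-agent string, but the requests come from your own IP address, so it cannot reproduce IP-based blocks; URL Inspection remains the authoritative check. `fetch_status` and `still_unauthorized` are hypothetical helper names.

```python
import urllib.error
import urllib.request

# Googlebot's desktop user-agent string; servers that vary by user agent
# may answer this differently than they answer a browser
USER_AGENT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code the server sends for a GET request."""
    request = urllib.request.Request(url, headers={"User-Agent": USER_AGENT})
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # urlopen raises on 4xx/5xx responses; the status code is on the error
        return err.code


def still_unauthorized(urls):
    """List the URLs that still answer with 401 Unauthorized."""
    return [url for url in urls if fetch_status(url) == 401]
```

Note that `urlopen` follows redirects, so a URL that redirects to a public login page reports the final page’s status, not a 401.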

Scenario # 2: Intentionally Restricting Pages

Sometimes you want to keep Googlebot away from private content or the admin sections of your website. Here’s how to manage those restrictions:

Step 1: Create or Edit the robots.txt File

If your website doesn’t already have a robots.txt file, create one in its root directory so it is served at /robots.txt. If you already have one, add the Disallow rules you need to it.

Step 2: Add Disallow Directives

List the URLs or folders Googlebot should not crawl. For example:

```
User-agent: Googlebot
Disallow: /admin/
Disallow: /private-page/
```

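This tells Googlebot not to crawl those areas of your website. Keep in mind that robots.txt controls crawling, not access: it is not a security measure, and a disallowed URL can still be indexed (without its content) if other pages link to it.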

Step 3: Verify the robots.txt File

Use the robots.txt report in Google Search Console (which replaced the older robots.txt Tester) to confirm that Google can fetch your file and that your rules restrict Googlebot as intended.
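For a quick local check before uploading, Python’s standard-library `urllib.robotparser` can evaluate the rules against sample URLs. Treat it as a rough pre-check only: it does simple prefix matching, applies the first matching rule rather than the most specific one, and does not implement Google’s `*` and `$` wildcards, so Search Console’s report remains authoritative. `check_rules` is a hypothetical helper and the example.com URLs are placeholders.

```python
from urllib.robotparser import RobotFileParser

# The rules from the example above
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /admin/
Disallow: /private-page/
"""


def check_rules(robots_txt: str, user_agent: str, urls):
    """Return {url: True if crawling is allowed} under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(user_agent, url) for url in urls}
```

With these rules, `check_rules(ROBOTS_TXT, "Googlebot", ["https://example.com/admin/users"])` reports the admin URL as disallowed, while other crawlers such as Bingbot are unaffected because the group only names Googlebot.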

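Step 4: Track the Page Indexing Report

Check the Page indexing report in Google Search Console regularly. Once Googlebot stops requesting the disallowed pages, they should no longer appear with 401 errors; if Google still knows about those URLs, it typically lists them under “Blocked by robots.txt” instead.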

Scenario # 3: Managing Content Behind Paywalls or Restricted Access

If you have content behind a paywall or other restricted access, you may still want Google to index it without making it freely available to every visitor. Here’s how to manage it:

Step 1: Implement Schema Markup

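Mark the content as paywalled with schema.org structured data: set isAccessibleForFree to false and use hasPart with a cssSelector that points to the paywalled section. This helps Google understand the nature of the content without full public access and distinguishes a legitimate paywall from cloaking. Google can only index paywalled text it is able to fetch, so pages that return a 401 to Googlebot still won’t be indexed.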
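The markup can come from a page template or a script. This Python sketch builds a JSON-LD block in the shape Google documents for paywalled content; the headline, the `NewsArticle` type, and the `.paywall` selector are placeholders, and the selector must match the class on the element that wraps your paywalled section. `paywall_jsonld` is a hypothetical helper name.

```python
import json


def paywall_jsonld(headline: str, css_selector: str, article_type: str = "NewsArticle") -> str:
    """Return a <script> tag with paywall structured data for the page head."""
    data = {
        "@context": "https://schema.org",
        "@type": article_type,
        "headline": headline,
        # The page as a whole is not free to access...
        "isAccessibleForFree": False,
        # ...and this element (located by CSS selector) is the paywalled part
        "hasPart": {
            "@type": "WebPageElement",
            "isAccessibleForFree": False,
            "cssSelector": css_selector,
        },
    }
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"
```

`json.dumps` writes Python’s `False` as the JSON literal `false`, which is what the schema expects.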

Step 2: Apply Noindex Meta Tags

For pages you don’t want indexed at all, add a noindex meta tag to the HTML head section:

```html
<meta name="robots" content="noindex">
```

Googlebot can still crawl the page, but the tag tells it not to index it. Because Googlebot has to fetch the page to see the tag, don’t also block that page in robots.txt.
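To spot-check that the tag actually made it into a page’s HTML, you could parse it with Python’s standard-library `html.parser`. This sketch assumes you already have the page’s HTML as a string; it looks at `meta` tags named `robots` or `googlebot` (both names are honored by Google) and ignores other meta tags. `RobotsMetaFinder` and `has_noindex` are hypothetical names.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # Valueless attributes arrive as None; normalize them to ""
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            # Split "noindex, nofollow" into individual lowercase directives
            self.directives.extend(
                part.strip().lower() for part in attributes.get("content", "").split(",")
            )


def has_noindex(html: str) -> bool:
    finder = RobotsMetaFinder()
    finder.feed(html)
    return "noindex" in finder.directives
```

Self-closing tags such as `<meta ... />` are handled too, because `HTMLParser` routes them through `handle_starttag` by default.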

Step 3: Update and Submit Sitemaps

Keep your XML sitemap up to date so it lists the pages you want indexed and leaves out those you don’t. Submit it in Google Search Console to help Google understand your site’s structure.
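If your CMS doesn’t generate a sitemap for you, a small script can build one from the list of URLs you want indexed. This Python sketch uses only the standard library and writes the minimal required elements of the sitemaps.org protocol (`urlset`, `url`, `loc`); where the URL list comes from is left to you, and `build_sitemap` is a hypothetical name.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls) -> str:
    """Return sitemap XML listing only the given (indexable) URLs."""
    urlset = ET.Element("urlset", {"xmlns": SITEMAP_NS})
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as & in the text automatically
        ET.SubElement(entry, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body
```

Leaving paywalled-but-noindexed and robots.txt-disallowed pages out of the list keeps the sitemap consistent with the signals from the previous steps.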

Step 4: Test and Monitor

Use the URL Inspection tool to see how Googlebot sees the pages. Check the Page indexing report regularly to confirm the 401 errors are resolved and your restricted content is handled as intended.

Conclusion

Fixing “Blocked due to unauthorized request (401)” errors in Google Search Console is part of keeping your website healthy and visible in search. Identifying the underlying cause and applying the right fix helps ensure Googlebot can access and index the content you want indexed.

- Fix Unintentional Restrictions: Review password protection, IP restrictions, and authentication settings so Googlebot can reach your important content.

- Intentionally Restrict Pages: Use robots.txt to keep Googlebot out of admin areas and private content, then confirm your configuration with Google Search Console’s tools.

- Manage Paywalled Content: Use structured data and robots meta tags to tell Google how to treat restricted content.

Regular monitoring, with adjustments as needed, helps you maintain a healthy, search-friendly website.

Author

We are a digital marketing agency with over 17 years of experience and a proven track record of helping businesses succeed. Our expertise spans businesses of all sizes, enabling them to grow their online presence and connect with new customers effectively. In addition to offering services like consulting, SEO, social media marketing, web design, and web development, we pride ourselves on conducting thorough research on top companies and various industries. We compile this research into actionable insights and share it with our readers, providing valuable information in one convenient place rather than requiring them to visit multiple websites. As a team of passionate and experienced digital marketers, we are committed to helping businesses thrive and empowering our readers with knowledge and strategies for success.
